Why Do Multi-Node Request Flows Show Different Timing Patterns on Identical Tasks?

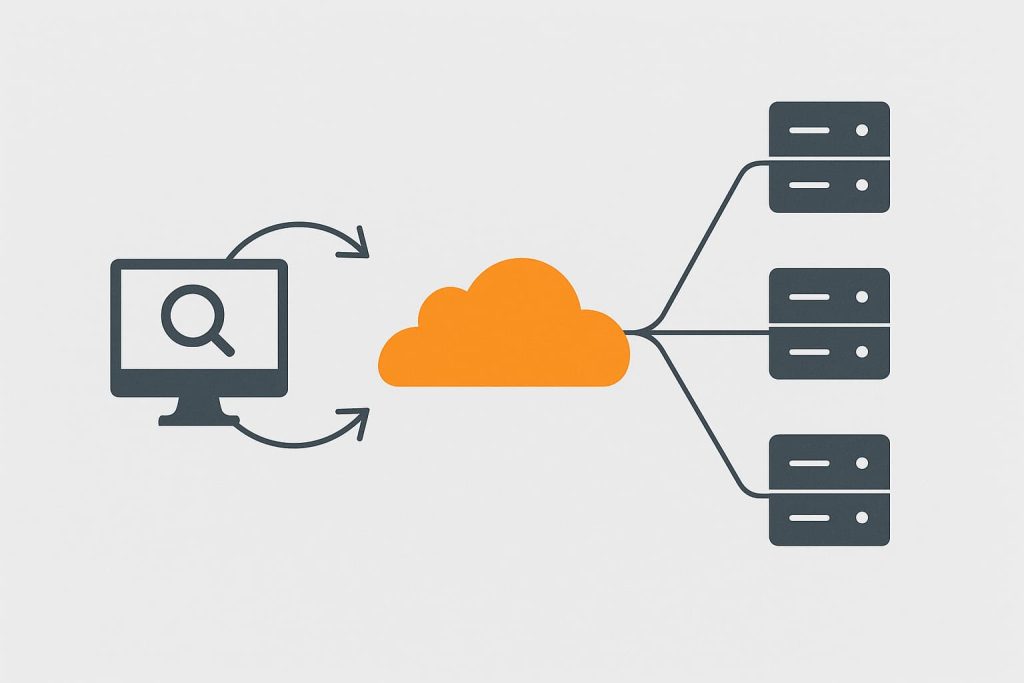

Picture a workflow where the same task is sent through multiple nodes, such as a distributed crawler, a multi-exit proxy pool, or a region-aware request scheduler.

Each node receives identical instructions, identical payloads, identical endpoints, identical headers.

Yet the timing tells a different story.

One node finishes the task in milliseconds.

Another hesitates briefly.

A third displays a subtle stagger across phases: handshake, first byte, content transfer, or completion.

Same job.

Same system.

Different timing.

This isn’t random noise.

Multi-node timing divergence is the natural outcome of layered infrastructure, routing diversity, and micro-level conditions that shape each node’s unique execution pattern.

CloudBypass API observes these patterns in detail and helps developers interpret what’s really happening beneath the surface.
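To make the phases mentioned above concrete, here is a minimal sketch of a per-phase breakdown. It assumes each node records four monotonic timestamps per request; the `RequestTimestamps` fields and the sample values are hypothetical and are not part of any CloudBypass API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RequestTimestamps:
    """Monotonic timestamps in seconds for one request (illustrative field names)."""
    start: float       # request issued
    connected: float   # TCP/TLS handshake finished
    first_byte: float  # first response byte received
    done: float        # last response byte received


def phase_durations(ts: RequestTimestamps) -> dict[str, float]:
    """Split one request into handshake, first-byte wait, and transfer, in milliseconds."""
    return {
        "handshake_ms": (ts.connected - ts.start) * 1000,
        "first_byte_ms": (ts.first_byte - ts.connected) * 1000,
        "transfer_ms": (ts.done - ts.first_byte) * 1000,
        "total_ms": (ts.done - ts.start) * 1000,
    }


# Made-up samples: two nodes with the same total but a different phase shape.
node_a = RequestTimestamps(start=0.000, connected=0.020, first_byte=0.050, done=0.080)
node_b = RequestTimestamps(start=0.000, connected=0.045, first_byte=0.060, done=0.080)

for name, ts in (("node_a", node_a), ("node_b", node_b)):
    print(name, {k: round(v, 1) for k, v in phase_durations(ts).items()})
```

Both made-up nodes finish in about the same total time, yet node_b spends far more of it in the handshake, which is the kind of stagger a total-latency figure hides.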

1. Each Node Lives Inside Its Own Timing Environment

Even nodes within the same pool operate under different micro-conditions:

- local CPU pressure

- regional network conditions (“internet weather”)

- upstream carrier behavior

- queue rotation intervals

- subtle hardware differences

These factors shape timing behavior on a per-node basis long before traffic even leaves the proxy.

2. Routing Divergence Creates Natural Timing Spread

Two nodes may exit from the same city yet travel completely different upstream routes.

Those routes differ in:

- hop count

- congestion windows

- pacing rules

- packet scheduling behavior

- path fairness algorithms

This creates timing drift even when the total latency looks similar.

CloudBypass API monitors these divergences through path-level timing signatures.
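The point about drift with similar overall latency can be illustrated with plain statistics. The sketch below uses made-up samples and a hypothetical helper, `spread_summary`; it only shows that two nodes can share a mean while differing widely in spread.

```python
import statistics


def spread_summary(samples_ms: list[float]) -> dict[str, float]:
    """Summarize central tendency and spread for one node's latency samples."""
    return {
        "mean_ms": statistics.fmean(samples_ms),
        "stdev_ms": statistics.stdev(samples_ms),       # sample standard deviation
        "range_ms": max(samples_ms) - min(samples_ms),  # widest observed gap
    }


# Made-up samples: same average latency, very different consistency.
steady_node = [100, 101, 99, 100, 100]
drifting_node = [80, 120, 95, 105, 100]

print("steady:", spread_summary(steady_node))
print("drifting:", spread_summary(drifting_node))
```

Comparing only the means would rate these two nodes as equivalent; the standard deviation and range are what expose the drift.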

3. Cache Health Varies by Node

Even in synchronized systems, caches diverge:

- some nodes hold warm objects

- others refresh or revalidate

- some run eviction cycles sooner

- others fall behind in sync

Thus identical requests don’t receive identical timing advantages.

4. Node-Level Load Is Not Evenly Distributed

Workload distribution rarely stays perfectly balanced.

A node may receive:

- a sudden micro-burst

- a heavier adjacent client

- a brief internal process

- metadata update cycles

These spikes are brief but sufficient to distort timing patterns on identical tasks.
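One simple way to spot such brief spikes in a node's samples is to compare each sample against the node's own median. The following is a rough sketch; the `factor` threshold is an arbitrary assumption, and the sample values are invented.

```python
from statistics import median


def burst_indices(samples_ms: list[float], factor: float = 3.0) -> list[int]:
    """Return positions of samples that exceed `factor` times the node's median."""
    baseline = median(samples_ms)
    return [i for i, ms in enumerate(samples_ms) if ms > factor * baseline]


# Made-up samples: one request landed during a short load spike.
samples = [50, 52, 49, 210, 51, 50]
print(burst_indices(samples))
```

The median is used as the baseline because, unlike the mean, it is barely moved by the spike itself.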

5. Nodes Evolve at Different Speeds

Software patches, kernel updates, and internal tuning are often rolled out progressively:

- new TCP stacks

- improved pacing modules

- experimental scheduling tweaks

- updated inspection layers

A single updated module can change the timing fingerprint noticeably.

6. Connection Reuse Conditions Differ Across Nodes

Some nodes reuse:

- cached DNS results

- connection pools

- TLS session tickets

- routing hints

- ephemeral state traces

Others start from cold conditions.

Cold start vs. warm state alone can widen timing differences dramatically.
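To quantify the cold-versus-warm gap on a single node, one option is to tag each sample with whether it reused an existing connection and compare medians. This is a sketch under that assumption; the `(reused, duration_ms)` tuple layout and the numbers are hypothetical.

```python
from statistics import median
from typing import Optional


def cold_warm_gap(samples: list[tuple[bool, float]]) -> Optional[float]:
    """Median cold duration minus median warm duration, in milliseconds.

    Each sample is (reused_connection, duration_ms). Returns None when
    either group is empty, since no comparison is possible.
    """
    cold = [ms for reused, ms in samples if not reused]
    warm = [ms for reused, ms in samples if reused]
    if not cold or not warm:
        return None
    return median(cold) - median(warm)


# Made-up samples: cold requests pay for DNS, TCP, and TLS setup again.
samples = [(False, 180.0), (True, 60.0), (True, 64.0), (False, 200.0), (True, 62.0)]
print(cold_warm_gap(samples))
```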

7. Region and Sub-Region Behavior Shapes Execution

Even within a single country, nodes may exist on:

- different carriers

- different peering agreements

- different metro fiber systems

- different load policies

Region clusters form micro-ecosystems, each shaping timing uniquely.

8. Timing Alignment Drifts Differently at Each Node

Nodes fall out of sync at different rates due to:

- queue rollover

- clock synchronization variance

- jitter compensation

- handshake pacing shifts

These tiny alignment differences accumulate into visible timing patterns.

CloudBypass API maps this drift and highlights where timing symmetry breaks.
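Because wall clocks on different nodes are never perfectly synchronized, it is usually safer to measure durations locally with a monotonic clock and compare durations, not timestamps, across nodes. A minimal sketch using Python's `time.perf_counter`; the `timed` helper is illustrative.

```python
import time
from typing import Any, Callable


def timed(fn: Callable[..., Any], *args: Any, **kwargs: Any) -> tuple[Any, float]:
    """Run fn and return (result, elapsed_ms) measured with a monotonic clock.

    perf_counter is unaffected by wall-clock adjustments such as NTP
    corrections, so the elapsed value stays meaningful on each node.
    """
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    elapsed_ms = (time.perf_counter() - start) * 1000
    return result, elapsed_ms


# Stand-in workload; a real caller would pass its request function instead.
result, elapsed_ms = timed(sum, range(1_000_000))
print(f"result={result} elapsed={elapsed_ms:.2f} ms")
```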

9. Why Identical Tasks Don’t Produce Identical Curves

Multi-node flows operate across independent timelines.

Even if tasks match perfectly on paper, real-world execution reflects the node’s unique environment:

- internal load

- routing path

- cache state

- warm vs. cold conditions

- timing alignment window

Thus the timing curves diverge naturally.
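A simple way to compare timing curves across nodes is to reduce each node to a small fingerprint (here, median and 95th percentile) and flag nodes whose median strays from the pool. This is only a sketch: the 25% tolerance, the function names, and the sample data are assumptions, and it is not a description of how CloudBypass API computes its fingerprints.

```python
from statistics import median, quantiles


def fingerprint(samples_ms: list[float]) -> dict[str, float]:
    """Reduce one node's samples to a median and an approximate p95."""
    cuts = quantiles(samples_ms, n=20)  # 19 cut points; the last one is p95
    return {"p50": median(samples_ms), "p95": cuts[18]}


def divergent_nodes(per_node: dict[str, list[float]], tolerance: float = 0.25) -> list[str]:
    """Names of nodes whose median differs from the pool median by more than tolerance."""
    prints = {name: fingerprint(samples) for name, samples in per_node.items()}
    pool_p50 = median(p["p50"] for p in prints.values())
    return sorted(
        name for name, p in prints.items()
        if abs(p["p50"] - pool_p50) > tolerance * pool_p50
    )


# Made-up samples for three nodes running the same task.
per_node = {
    "node-a": [100, 102, 98, 101, 99],
    "node-b": [100, 99, 103, 97, 101],
    "node-c": [150, 160, 155, 148, 152],
}
print(divergent_nodes(per_node))
```

Using the pool median as the reference keeps a single slow node from shifting the baseline it is judged against.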

Identical tasks do not guarantee identical timing because each node operates within its own micro-reality.

Routing variation, cache health, hardware cycles, region conditions, and timing alignment all shape the final behavior.

CloudBypass API brings clarity to this complexity by exposing path-level timing drift, node-based irregularities, and the hidden forces behind multi-node divergence, turning confusing timing patterns into actionable insight.

FAQ

1. Why are multi-node timings inconsistent even when tasks are identical?

Because each node has unique load, routing, cache states, and timing alignment.

2. Does higher latency always mean a weaker node?

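Not necessarily. Higher latency can come from a longer route, a cold cache, or a brief load spike rather than a weak node, and timing drift can appear even when average latency stays the same.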

3. Can routing alone cause large timing differences?

Yes. Even small path variations can reshape handshake and fetch timing.

4. Why do some nodes feel “warm” while others feel “cold”?

Warm nodes reuse prior state; cold nodes rebuild everything from scratch.

5. How does CloudBypass API help?

It compares timing fingerprints across nodes, revealing drift, hidden slow paths, and region-based performance variation.