Proxy Pool or Direct Routing: Which Reduces Response-Order Shifts More Effectively?

Imagine you’re running a workload where response order matters.

You’re fetching batches of small requests, expecting them to come back in roughly the same sequence they were sent.

But sometimes, one batch behaves beautifully — smooth, well-ordered, predictable.

Next batch? A handful of responses jump ahead, a few stall, and suddenly the whole sequence looks scrambled.

When this happens, developers often ask:

“Should I switch to a proxy pool, or stick with a single direct route for stability?”

This article breaks down how each method affects response-order stability, why they behave differently under changing network conditions, and how CloudBypass API helps measure these subtle timing patterns.
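
Before comparing the two approaches, it helps to put a number on "scrambled". One common way is to count inversions: pairs of responses that arrived in the opposite order to how they were sent. The sketch below is a minimal illustration, not part of any particular API; the function names `count_inversions` and `order_stability` are made up for this example.

```python
from typing import Sequence


def count_inversions(completion_order: Sequence[int]) -> int:
    """Count pairs of responses that arrived in the opposite order to how they were sent.

    completion_order lists request sequence numbers in the order responses arrived.
    """
    inversions = 0
    for i in range(len(completion_order)):
        for j in range(i + 1, len(completion_order)):
            if completion_order[i] > completion_order[j]:
                inversions += 1
    return inversions


def order_stability(completion_order: Sequence[int]) -> float:
    """Return 1.0 for perfectly ordered responses and 0.0 for a fully reversed batch."""
    n = len(completion_order)
    max_pairs = n * (n - 1) // 2
    if max_pairs == 0:
        return 1.0  # zero or one response cannot be out of order
    return 1.0 - count_inversions(completion_order) / max_pairs
```

A batch that arrives as `[0, 2, 1, 3]` has one inversion out of six possible pairs. The quadratic loop is fine for small batches; very large batches would call for a merge-sort based count.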

1. Direct Routing: The Promise of Predictable, Single-Path Behavior

Direct routing funnels all requests through one stable path.

When that path is healthy, you get:

- consistent timing

- low round-trip variance

- predictable handshake behavior

- tight ordering on sequential requests

If your region → server route stays clean, direct routing often delivers near-perfect response order.

But stability breaks the moment any of these appear:

- transient congestion

- micro-burst delays

- pacing jitter

- node-level imbalance

A single-path route has no fallback.

If it slows down for even a second, everything slows with it.

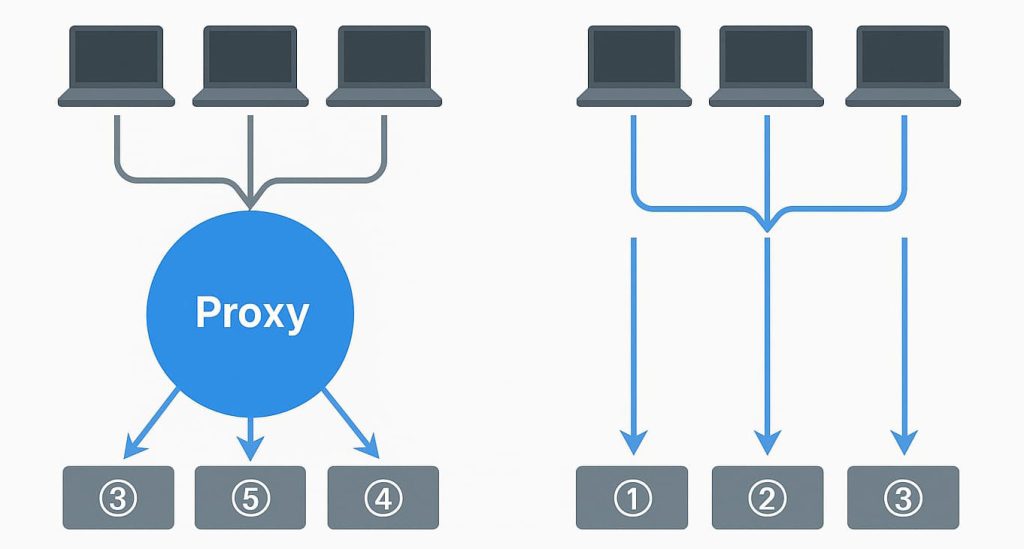

2. Proxy Pools: Dynamic, Adaptive, More Resilient — But Not Always More Stable

Proxy pools distribute requests across multiple exit nodes.

This provides advantages such as:

- bypassing temporary slow regions

- smoothing out local congestion

- avoiding stuck or degraded paths

- increasing total throughput

But they also introduce:

- multi-route timing differences

- per-exit negotiation variance

- handshake diversity

- per-node load fluctuations

This means response order can shift because different nodes perform differently at the same moment.

A proxy pool is like having multiple runners on a track — the average speed increases, but the finishing order becomes less predictable.
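
The runner analogy can be made concrete with a toy simulation. The sketch below assumes round-robin assignment of requests to exit nodes and a fixed latency per node; both are simplifications, and the node names and latency values are hypothetical.

```python
from itertools import cycle
from typing import Dict, List


def simulate_completion_order(
    node_latency_ms: Dict[str, float], requests: int, send_gap_ms: float
) -> List[int]:
    """Return request sequence numbers in completion order under round-robin assignment."""
    nodes = cycle(node_latency_ms)  # iterate over node names in a repeating loop
    finish_times = []
    for seq in range(requests):
        node = next(nodes)
        finish_times.append(seq * send_gap_ms + node_latency_ms[node])
    # Sort by finish time; ties keep send order because sorted() is stable.
    return sorted(range(requests), key=lambda seq: finish_times[seq])


# Hypothetical latencies: three uneven exit nodes versus one direct path.
pool_order = simulate_completion_order(
    {"node-a": 40.0, "node-b": 90.0, "node-c": 45.0}, requests=6, send_gap_ms=10.0
)
direct_order = simulate_completion_order({"direct": 60.0}, requests=6, send_gap_ms=10.0)
```

With these made-up numbers, every request routed through the slow `node-b` finishes after requests that were sent later, while the single direct path keeps send order.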

3. Why Direct Routing Maintains Better Sequence Consistency

The key reason is path determinism.

Direct routing keeps:

- one edge entry

- one transit pattern

- one handshake context

- one regional pacing strategy

Even when timing fluctuates, it tends to fluctuate together for all requests, because they share one path.

Response-order stability therefore stays high: a delay that hits every request equally does not change their relative order.
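
The reasoning above comes down to a simple condition: a later request overtakes an earlier one only when the earlier request's delay exceeds the later one's by more than the gap between their send times. The sketch below checks that condition on made-up timings; the function names are illustrative.

```python
from typing import List


def overtakes(send_gap_ms: float, earlier_delay_ms: float, later_delay_ms: float) -> bool:
    """True if the later-sent request finishes before the earlier-sent one."""
    return earlier_delay_ms - later_delay_ms > send_gap_ms


def count_adjacent_overtakes(send_times_ms: List[float], delays_ms: List[float]) -> int:
    """Count neighbouring request pairs whose responses arrive in swapped order."""
    swaps = 0
    for i in range(len(send_times_ms) - 1):
        gap = send_times_ms[i + 1] - send_times_ms[i]
        if overtakes(gap, delays_ms[i], delays_ms[i + 1]):
            swaps += 1
    return swaps


send_times = [0.0, 10.0, 20.0, 30.0]  # hypothetical: one request every 10 ms

# A spike that delays every request by the same amount leaves the order intact.
shared_spike_swaps = count_adjacent_overtakes(send_times, [560.0, 560.0, 560.0, 560.0])

# A delay that drops by 20 ms between requests 0 and 1 exceeds the 10 ms gap.
uneven_swaps = count_adjacent_overtakes(send_times, [80.0, 60.0, 65.0, 60.0])
```

This also shows the limit of direct routing: if the shared path's delay changes faster than the send gap, even a single route can reorder responses.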

4. Why Proxy Pools Can Reduce Order Stability but Increase Overall Success Rates

Proxy pools prioritize availability and resilience, not strict sequential timing.

Because each request might exit from:

- a different region

- a different node cluster

- a different latency bubble

- a different pacing heuristic

the system gains robustness but loses sequence-level predictability.

This is why high-frequency crawlers often see:

- better access success

- lower failure rates

- fewer stuck connections

- but more response reordering

It’s a tradeoff.
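
To see the tradeoff in your own logs, you can compute both sides of it from the same batch: the success rate and the number of responses that arrived late. The sketch below assumes a simple record of sequence number, success flag, and finish time; the `Result` type and the sample values are hypothetical and only illustrate the calculation.

```python
from typing import List, NamedTuple, Tuple


class Result(NamedTuple):
    seq: int          # position in send order
    ok: bool          # whether the request succeeded
    finish_ms: float  # completion time, measured from batch start


def summarize(results: List[Result]) -> Tuple[float, int]:
    """Return (success rate, count of successful responses that arrived late).

    A response is "late" if a response with a higher sequence number arrived before it.
    """
    if not results:
        return 0.0, 0
    succeeded = sorted((r for r in results if r.ok), key=lambda r: r.finish_ms)
    late = 0
    highest_seq_seen = -1
    for r in succeeded:
        if r.seq < highest_seq_seen:
            late += 1
        highest_seq_seen = max(highest_seq_seen, r.seq)
    return len(succeeded) / len(results), late


# Made-up batches: the direct route drops one request, the pool reorders two.
direct = [Result(0, True, 50.0), Result(1, True, 60.0), Result(2, False, 0.0), Result(3, True, 80.0)]
pool = [Result(0, True, 90.0), Result(1, True, 45.0), Result(2, True, 70.0), Result(3, True, 60.0)]

direct_summary = summarize(direct)
pool_summary = summarize(pool)
```

In this illustrative data the direct batch has a lower success rate with no late responses, while the pool batch succeeds every time but delivers two responses late.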

5. The Hidden Layer: Node-Load Variance

Proxy nodes are not equal.

Some nodes:

- warm caches faster

- negotiate TLS more efficiently

- sit closer to certain endpoints

- handle burst traffic more smoothly

Others might be:

- colder

- busier

- slower to respond

- temporarily weighted differently by the load balancer

This unevenness is the core reason proxy pools cause response-order drift.
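
If you log which node served each request, the unevenness is easy to surface. The sketch below groups latency samples by node and reports the median and spread for each; the `(node, latency)` sample format and the node names are assumptions made for illustration.

```python
from collections import defaultdict
from statistics import median, pstdev
from typing import Dict, Iterable, List, Tuple


def per_node_summary(samples: Iterable[Tuple[str, float]]) -> Dict[str, Tuple[float, float]]:
    """Map each node to (median latency, population standard deviation) in milliseconds."""
    by_node: Dict[str, List[float]] = defaultdict(list)
    for node, latency_ms in samples:
        by_node[node].append(latency_ms)
    return {node: (median(values), pstdev(values)) for node, values in by_node.items()}


# Hypothetical samples: node-a is steady, node-b swings widely.
summary = per_node_summary(
    [("node-a", 40.0), ("node-a", 44.0), ("node-b", 40.0), ("node-b", 120.0)]
)
```

A node with a large spread relative to your send gap is the one most likely to push responses out of order.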

6. Regional Impact: Geography Changes Everything

Direct routes remain consistent only when your region → server path is stable.

Proxy pools mitigate regional instability by offering multiple paths — but they also make timing less deterministic because each region interacts differently with each exit node.

CloudBypass API captures this drift with per-region timing signatures.

7. When Proxy Pools Actually Improve Order Stability

Interestingly, in some cases proxy pools outperform direct routing:

- when local routing is unstable

- when you hit region-specific congestion

- when direct routes suffer timing bursts

- when your ISP has pacing issues

By switching between healthier nodes, proxy pools can restore order stability that direct routing loses.

This effect is most noticeable in congested or inconsistent networks.
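
"Switching between healthier nodes" needs some definition of health. One simple option, used here purely as an assumption, is an exponentially weighted moving average (EWMA) of each node's recent latency. The `NodeHealth` class below is a hypothetical sketch, not how any specific proxy pool selects nodes.

```python
from typing import Dict


class NodeHealth:
    """Track a smoothed latency per node and pick the currently fastest one."""

    def __init__(self, alpha: float = 0.3) -> None:
        self.alpha = alpha  # weight given to the newest sample
        self.ewma_ms: Dict[str, float] = {}

    def observe(self, node: str, latency_ms: float) -> None:
        previous = self.ewma_ms.get(node)
        if previous is None:
            self.ewma_ms[node] = latency_ms  # first sample seeds the average
        else:
            self.ewma_ms[node] = self.alpha * latency_ms + (1 - self.alpha) * previous

    def healthiest(self) -> str:
        """Return the node with the lowest smoothed latency (raises ValueError if empty)."""
        return min(self.ewma_ms, key=lambda node: self.ewma_ms[node])


health = NodeHealth(alpha=0.5)
health.observe("node-a", 40.0)
health.observe("node-b", 50.0)
health.observe("node-a", 200.0)  # node-a hits a latency spike
best = health.healthiest()
```

After the spike, `node-a` has a smoothed latency of 120 ms, so the selector moves to `node-b`. A higher `alpha` reacts faster to spikes but also switches nodes more often, which can itself add reordering.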

8. CloudBypass API Helps You Measure Which Method Fits Your Use Case

CloudBypass API doesn’t control routing, but it reveals the timing mechanics behind each route, including:

- per-node latency drift

- handshake-phase variance

- region-based pacing differences

- internal ordering correlation

- micro-jitter signatures

With these metrics, developers can finally answer:

“Which system gives me the most consistent output for my workload?”

Not hypothetically, but empirically.
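
Independent of any measurement service, you can collect the basic signal yourself: send a batch concurrently and record the order in which responses complete. The sketch below uses `asyncio` with a stand-in `fake_fetch` that only sleeps; in practice you would swap in your own request function for each routing setup. It does not use or represent the CloudBypass API, and the delay values are hypothetical.

```python
import asyncio
from typing import Awaitable, Callable, List


async def record_completion_order(
    fetch: Callable[[int], Awaitable[object]], count: int
) -> List[int]:
    """Start `count` requests in send order and return sequence numbers in completion order."""
    finished: List[int] = []

    async def run(seq: int) -> None:
        await fetch(seq)
        finished.append(seq)  # appended the moment this request completes

    await asyncio.gather(*(run(seq) for seq in range(count)))
    return finished


# Stand-in for a real request: each sequence number sleeps for a fixed, made-up delay.
FAKE_DELAYS_S = [0.09, 0.03, 0.06]


async def fake_fetch(seq: int) -> None:
    await asyncio.sleep(FAKE_DELAYS_S[seq])


order = asyncio.run(record_completion_order(fake_fetch, len(FAKE_DELAYS_S)))
```

Running the same harness once through a direct route and once through a proxy pool, then comparing the recorded orders over many batches, gives you the empirical answer for your workload.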

FAQ

1. Why do proxy pools sometimes create more response-order drift?

Because each request may exit through a different node with its own pacing behavior, congestion level, and handshake signature. This variability improves resilience but reduces deterministic ordering.

2. Why can direct routing still fail to maintain sequence order sometimes?

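Direct routing has no fallback paths. If the single route experiences a micro-burst, jitter spike, or node imbalance, the entire sequence is affected at once, and a delay that changes faster than the gap between requests can let later requests overtake earlier ones.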

3. Is response-order stability more dependent on geography or node behavior?

Node behavior usually dominates. Even within the same region, different nodes show different timing curves. Geography matters, but node variance tends to be the bigger driver.

4. Can proxy pools ever outperform direct routing in maintaining order?

Yes — especially in unstable regional networks. When one direct route becomes inconsistent, proxy pools can stabilize order by switching to healthier nodes.

5. How does CloudBypass API help determine which method is better for my workload?

CloudBypass API maps timing drift across nodes, regions, and request phases, so you can see which routing pattern produces the fewest sequence disruptions for your specific traffic profile.

Choosing between a proxy pool and direct routing isn’t about which one is “better.”

It’s about which one aligns with your workload’s priorities:

Use direct routing when you need:

- strict response-order consistency

- predictable timing

- minimal route variance

Use proxy pools when you need:

- higher availability

- resilience against traffic waves

- regional adaptability

- better success rates under stress

Each method solves a different problem — and response-order stability depends on how your workload interacts with real-world routing behavior.

CloudBypass API helps illuminate these differences so you can choose the right strategy based on actual timing data, not guesswork.