What Makes Data Retrieval Behave Differently on Sites Using Layered Filtering Systems?

Imagine you’re on a site that loads structured data — maybe product listings, search results, game statistics, or policy documents.

You click something simple, like “Show more,” and the page loads instantly.

Later that day, you click the same button, but now it hesitates.

Sometimes the data arrives in pieces.

Sometimes it loads out of order.

Sometimes the site asks for an extra confirmation step before continuing.

Nothing about your device changed.

Nothing about your browser changed.

Not even the exact request changed.

What did change was the path your request took through the site’s layered filtering system — a mix of traffic classifiers, sequencing validators, and resource prioritizers that quietly decide how to deliver your data.

This article explores why data retrieval behaves differently on such systems.

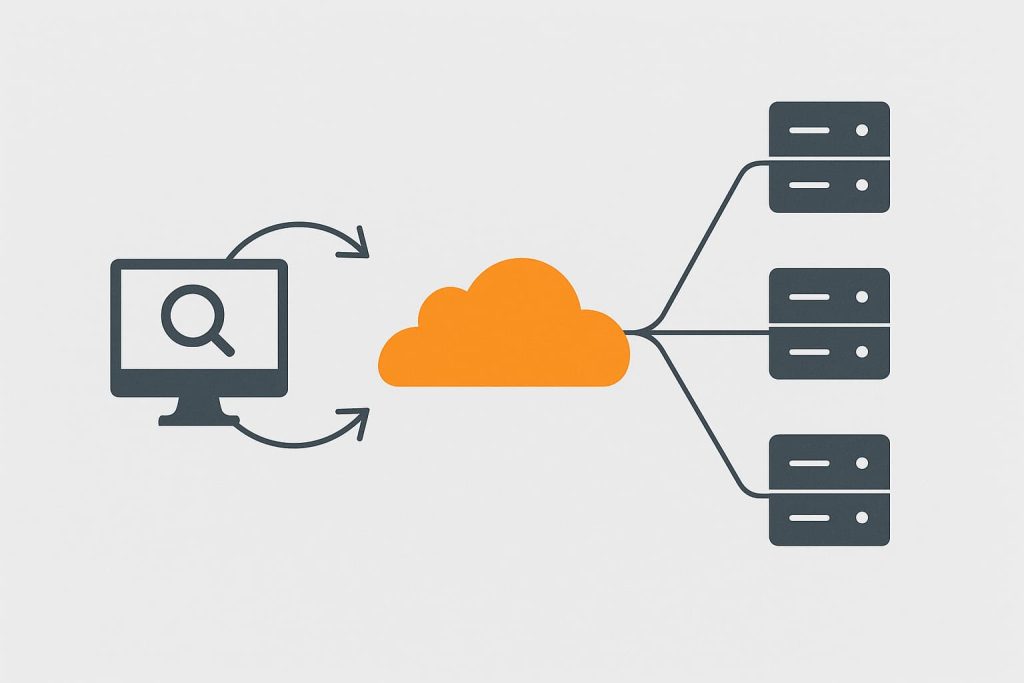

1. Layered Filtering Systems Don’t Follow a Single Processing Path

Modern platforms use multi-layer filters to organize, validate, and throttle requests.

A typical system might include:

- a global traffic classifier

- a regional load balancer

- a behavior-scoring module

- a data-access governor

- a sequencing validator

- a freshness/consistency checker

Your request may travel through:

Path A (light) → almost instant

Path B (medium) → small checks, slight delay

Path C (strict) → deeper inspection, more sequencing steps

The request looks the same — but the system doesn’t see it as the same.
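To make the idea of multiple paths concrete, here is a minimal sketch of how a system might pick a path from context rather than from the request itself. The `RequestContext` fields, the 0-to-1 scales, and the 0.3 / 0.7 cutoffs are all assumptions made for illustration; real platforms do not publish their routing rules.

```python
from dataclasses import dataclass


@dataclass
class RequestContext:
    # Both fields are hypothetical signals on a 0.0 to 1.0 scale.
    risk_score: float     # output of a behavior-scoring module
    regional_load: float  # how busy the serving region is


def choose_path(ctx: RequestContext) -> str:
    """Pick a processing path from context, not from the request payload."""
    # Assumed rule: the stronger of the two signals decides the path.
    pressure = max(ctx.risk_score, ctx.regional_load)
    if pressure < 0.3:
        return "A"  # light: almost instant
    if pressure < 0.7:
        return "B"  # medium: small checks, slight delay
    return "C"      # strict: deeper inspection


# The same request, seen under two different regional load levels.
same_request_morning = RequestContext(risk_score=0.1, regional_load=0.2)
same_request_evening = RequestContext(risk_score=0.1, regional_load=0.8)

print(choose_path(same_request_morning))
print(choose_path(same_request_evening))
```

In this sketch the payload never enters the decision, which is why two identical clicks can end up on different paths.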

2. Micro-Variations in Navigation Patterns Change Filter Decisions

Filtering systems often evaluate not the request itself, but the context around it.

Seemingly normal browsing behaviors can push a request into different categories:

- clicking too quickly after a heavy data load

- repeatedly asking for similar data in bursts

- jumping between related resources at high speed

- revisiting cached pages that no longer match server state

None of this is suspicious to a human.

But to a layered filtering engine, these signals alter the risk score or processing priority.

This is why the “same click” may feel fast one moment and sluggish the next.
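One way a behavior-scoring module could turn bursts into a score is a sliding-window counter. The sketch below is an assumption about how such scoring might work, not a description of any particular vendor's engine; `BurstScorer`, the 10-second window, and the limit of 5 requests are hypothetical.

```python
from collections import deque


class BurstScorer:
    """Hypothetical scorer: more requests inside the window, higher score."""

    def __init__(self, window_seconds: float = 10.0, burst_limit: int = 5):
        self.window_seconds = window_seconds
        self.burst_limit = burst_limit
        self._timestamps = deque()

    def score(self, now: float) -> float:
        """Record a request at time `now` and return a score in [0, 1]."""
        self._timestamps.append(now)
        # Drop requests that have fallen out of the sliding window.
        while now - self._timestamps[0] > self.window_seconds:
            self._timestamps.popleft()
        return min(1.0, len(self._timestamps) / self.burst_limit)


scorer = BurstScorer()
burst_scores = [scorer.score(t) for t in (0.0, 0.5, 1.0, 1.5, 2.0)]
calm_score = scorer.score(100.0)  # a single click long after the burst
```

Here the fifth rapid click reaches the maximum score, while the same click made after a quiet period scores low again.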

3. Data Retrieval Speed Depends on Which Internal Cache Tier You Hit

Many layered systems use multiple cache levels:

- hot cache (super fast)

- warm cache

- cold cache

- uncached (direct database retrieval)

Factors that influence which one you hit:

- regional load

- recent queries from nearby users

- whether your request shape resembles a known pattern

- internal cache-rotation schedules

- backend synchronization cycles

If you land on a cold tier, your request may feel dramatically slower — even though the request didn’t change at all.
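The tier lookup can be sketched as a chain of stores that are checked from fastest to slowest. This is a simplified illustration under stated assumptions: `TieredCache` and `load_from_database` are hypothetical names, and real systems add eviction, expiry, and rotation that are omitted here.

```python
from typing import Any, Callable, Dict, Tuple


class TieredCache:
    """Hypothetical three-tier cache with a database fallback."""

    def __init__(self, load_from_database: Callable[[str], Any]):
        # Dict insertion order gives the lookup order: hot, warm, cold.
        self.tiers: Dict[str, Dict[str, Any]] = {"hot": {}, "warm": {}, "cold": {}}
        self._load = load_from_database

    def get(self, key: str) -> Tuple[Any, str]:
        """Return (value, name of the tier that served it)."""
        for name, store in self.tiers.items():
            if key in store:
                value = store[key]
                self.tiers["hot"][key] = value  # copy into hot for next time
                return value, name
        # Nothing cached: fall back to the slowest source.
        value = self._load(key)
        self.tiers["hot"][key] = value
        return value, "uncached"


cache = TieredCache(load_from_database=lambda key: key.upper())
first = cache.get("listing-42")   # served from the database
second = cache.get("listing-42")  # served from the hot tier
```

The identical key is served by a different tier on the second call, which mirrors how an unchanged request can feel faster or slower.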

4. Sequence Validation Adds Unpredictable Delays

Layered systems must ensure that:

- data is shown in the correct order

- dependencies load in the correct order

- the user session remains consistent

- stale responses don’t overwrite fresh ones

When timing becomes irregular, the validator may:

- reorder flows

- retry phases

- wait for slower segments to catch up

- downgrade or reclassify the request

This creates visible stagger, even when nothing is technically “wrong.”
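One common way to keep stale responses from overwriting fresh ones is to tag each request with a sequence number and ignore any response older than the last one applied. The sketch below shows that general technique; `LatestWinsView` and its method names are hypothetical and are not taken from any specific platform.

```python
from typing import Any


class LatestWinsView:
    """Hypothetical view that only accepts responses newer than the last applied."""

    def __init__(self) -> None:
        self._next_seq = 0
        self._applied_seq = -1
        self.data: Any = None

    def start_request(self) -> int:
        """Hand out an increasing sequence number for each outgoing request."""
        seq = self._next_seq
        self._next_seq += 1
        return seq

    def apply_response(self, seq: int, payload: Any) -> bool:
        """Apply the payload unless a newer response was already applied."""
        if seq <= self._applied_seq:
            return False  # stale: arrived after a fresher response
        self._applied_seq = seq
        self.data = payload
        return True


view = LatestWinsView()
older = view.start_request()
newer = view.start_request()
applied_newer = view.apply_response(newer, "fresh page")
applied_older = view.apply_response(older, "stale page")  # arrives late
```

The late response is dropped rather than displayed, so the user sees a brief wait instead of data that jumps backward.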

5. Behavior Profiling Adapts Based on Regional or Temporal Conditions

Many systems dynamically adjust thresholds depending on:

- traffic density

- regional resource load

- known scraping patterns

- recent inconsistencies in user flows

- backend synchronization windows

As a result, a request that passes smoothly in the morning may travel a stricter path during a high-load period.

The system hasn’t changed; the context around your request has.
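A dynamic threshold can be sketched as a cutoff that drops as load rises. The linear formula, the base value of 0.7, and the sensitivity of 0.4 are assumptions chosen only to illustrate the effect; `strict_threshold` and `is_strict` are hypothetical names.

```python
def strict_threshold(base: float, load: float, sensitivity: float = 0.4) -> float:
    """Lower the score needed to trigger the strict path as load rises."""
    load = min(max(load, 0.0), 1.0)  # clamp load to the 0.0 to 1.0 range
    return max(0.0, base - sensitivity * load)


def is_strict(score: float, load: float, base: float = 0.7) -> bool:
    """Return True when the score meets the load-adjusted threshold."""
    return score >= strict_threshold(base, load)


# The same behavior score evaluated at two different load levels.
morning = is_strict(score=0.5, load=0.1)
peak = is_strict(score=0.5, load=0.9)
```

With these assumed numbers, a score of 0.5 stays on a lighter path at low load and crosses into the strict path at peak load, even though the user's behavior is identical.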

6. Network Rhythm Affects Filter Layer Decisions More Than Raw Speed

Filtering systems often consider:

- packet spacing

- request rhythm

- concurrency bursts

- timing jitter

- early/late arrival sequences

Fast but unstable connections may receive stricter processing.

Stable but slower connections may glide through the light path.

This is why some users report:

“Fiber feels slow today, but mobile data feels smooth.”

The site isn’t inconsistent — the filtering layers are reacting to timing differences.
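The difference between speed and rhythm can be shown by measuring the gaps between request arrivals. In this sketch, jitter is taken to be the population standard deviation of those gaps, which is one simple definition among several; the sample timestamps are invented for illustration.

```python
from statistics import mean, pstdev
from typing import List, Tuple


def rhythm_stats(arrival_times: List[float]) -> Tuple[float, float]:
    """Return (mean gap, jitter) for a sorted list of arrival timestamps."""
    gaps = [later - earlier for earlier, later in zip(arrival_times, arrival_times[1:])]
    if not gaps:
        raise ValueError("need at least two arrival times")
    return mean(gaps), pstdev(gaps)


# Invented timestamps in seconds: short gaps with sudden stalls vs. even spacing.
fast_unstable = [0.00, 0.01, 0.02, 0.30, 0.31, 0.60]
slow_stable = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]

fast_mean, fast_jitter = rhythm_stats(fast_unstable)
slow_mean, slow_jitter = rhythm_stats(slow_stable)
```

The first connection has the smaller average gap but far higher jitter, which is the kind of signal a timing-sensitive filter could treat as less predictable.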

7. Where CloudBypass API Helps

Layered filtering systems operate invisibly.

Developers cannot easily see:

- which path a request took

- why a retrieval phase stalled

- why data arrived in a different order

- why a normally light request triggered deeper checks

- which routing or timing factor influenced classification

CloudBypass API offers visibility into:

- timing drift at each retrieval phase

- regional path variation

- sequence irregularities

- cache-tier differences

- request-pattern scoring changes

- temporal load effects

It clarifies why filtering behaved differently this time.

Data retrieval behaves differently on layered filtering systems because:

- request context shifts

- timing rhythm changes

- caches evolve

- backend coordination fluctuates

- classification models adapt

- resource tiers rotate

To users, it feels random.

To the system, it’s deliberate.

CloudBypass API helps developers illuminate the hidden factors behind these variations, transforming confusion into actionable understanding.

FAQ

1. Why does the same data request load quickly one moment and slowly the next?

Because the filtering system may route it through a different internal path depending on timing and context.

2. Does this mean the system thinks I’m doing something suspicious?

Not necessarily — many routing changes are about efficiency, not security.

3. Why do cache tiers affect my experience so much?

Hot cache is extremely fast, while cold-tier retrieval requires deeper backend access.

4. Do network conditions matter?

Yes. Timing rhythm often influences path selection more than raw bandwidth does.

5. How does CloudBypass API help?

It provides insight into timing drift, retrieval path changes, and internal sequence behavior.