Why Do Issues Become Harder to Diagnose When Execution Paths Lack Visibility, and What Does Observability Actually Solve?

A job slows down, but nothing “fails.”

A batch finishes, but the output is missing pieces.

Retries spike, but logs look clean.

Two nodes run the same task, yet one drifts and nobody can explain why.

That is the real pain: not the issue itself, but the inability to prove where it starts.

The short answer, up front:

When the execution path is invisible, every symptom looks like the root cause.

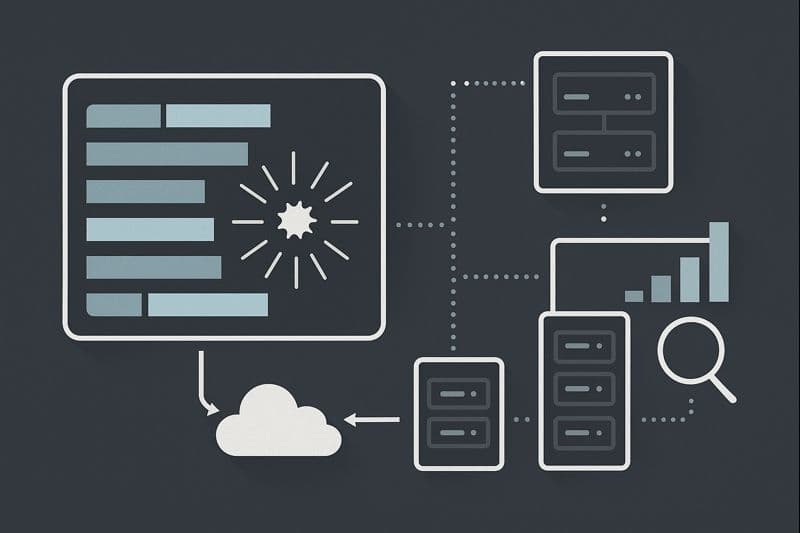

Observability turns guesswork into a timeline.

A timeline is what lets you fix the right stage instead of treating everything as “network problems.”

This article solves one specific problem:

why invisible execution paths make diagnosis harder, and what observability actually changes in day-to-day operations.

1. What “Lack of Visibility” Really Looks Like in Production Systems

1.1 You Only See the Start and the End

In many pipelines, you can see:

request sent

response received

status code

total latency

But you cannot see the middle.

You do not know:

where time was spent

which sub-stage stalled

whether a retry happened inside a dependency call

which node path introduced jitter

which step created an ordering gap

Without the middle, diagnosis collapses into speculation.

1.2 Symptoms Become Misleading

When a system lacks visibility, teams often blame the wrong thing.

Examples:

a slow response is blamed on the target site, but it was DNS variance

a failure is blamed on a node, but it was a retry storm upstream

a partial output is blamed on parsing, but it was missing pages caused by sequence drift

a “random” slowdown is blamed on traffic, but it was queue pressure inside the scheduler

Invisible paths create false narratives.

2. Why Problems Become Harder to Diagnose Over Time

2.1 Long Runs Hide Slow Degradation

Short tests rarely show structural decay.

Long runs expose it:

health slowly drops on specific nodes

tail latency grows

retry cost rises

ordering gaps accumulate

success rate decays gradually

If you only watch totals, you notice the decline late, when it is expensive to recover.
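To see why totals hide decay, compare a cumulative success rate with one computed over a sliding window. The sketch below uses made-up numbers (900 healthy tasks followed by 100 tasks at 70% success) and a hypothetical window size of 100; it illustrates the effect and is not a tuned detector.

```python
from collections import deque


def cumulative_rate(outcomes):
    """Success rate over the whole run so far: the 'totals' view."""
    return sum(outcomes) / len(outcomes) if outcomes else 1.0


class WindowedRate:
    """Success rate over only the most recent `size` outcomes."""

    def __init__(self, size):
        self.window = deque(maxlen=size)  # old outcomes fall off automatically

    def add(self, ok):
        self.window.append(1 if ok else 0)

    def rate(self):
        return sum(self.window) / len(self.window) if self.window else 1.0


# Hypothetical long run: 900 healthy tasks, then 100 tasks at 70% success.
outcomes = [1] * 900 + ([1] * 7 + [0] * 3) * 10
recent = WindowedRate(size=100)
for ok in outcomes:
    recent.add(ok)

print(f"cumulative: {cumulative_rate(outcomes):.2f}")  # cumulative: 0.97
print(f"last 100:   {recent.rate():.2f}")              # last 100:   0.70
```

The run-wide number still looks healthy while the recent window has already lost almost a third of its successes.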

2.2 Parallelism Creates Many Possible Failure Points

In multi-worker execution, a symptom can originate from:

one weak node

a noisy path

a congested dependency

a scheduler imbalance

a single stage backlog

Without observability, you cannot locate which lane caused the slowdown.
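One way to locate the lane is to break latency down per node and compare each node against the fleet. This sketch assumes you already collect latencies keyed by a node id; the node names, sample values, and the 1.5x threshold are hypothetical.

```python
from statistics import median


def slowest_lanes(latencies_by_node, factor=1.5):
    """Return nodes whose median latency exceeds `factor` x the fleet reference.

    latencies_by_node: dict mapping node id -> list of latencies (seconds).
    The fleet reference is the median of the per-node medians.
    """
    medians = {node: median(vals) for node, vals in latencies_by_node.items() if vals}
    fleet = median(medians.values())
    return sorted(node for node, m in medians.items() if m > factor * fleet)


samples = {
    "node-a": [0.21, 0.19, 0.22, 0.20],
    "node-b": [0.20, 0.23, 0.18, 0.21],
    "node-c": [0.55, 0.61, 0.58, 0.52],  # the weak lane
    "node-d": [0.22, 0.20, 0.19, 0.21],
}
print(slowest_lanes(samples))  # ['node-c']
```

Using the median of per-node medians keeps one bad node from dragging the reference up. If most nodes degrade at once, this comparison stops flagging anything, and that pattern itself points to a shared dependency rather than a single lane.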

So teams overcorrect:

they reduce concurrency globally

they rotate nodes blindly

they restart jobs unnecessarily

they widen timeouts instead of fixing the bottleneck

This “fix” often makes performance worse.

3. What Observability Actually Solves, in Practical Terms

3.1 It Builds a Stage-by-Stage Timeline

Observability turns a request into a sequence:

DNS

connect

handshake

first byte

transfer

parse

downstream calls

retry logic

queue wait

task completion
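A minimal way to build that sequence in code is to wrap each stage in a timer and keep the spans in order under one trace id. The sketch below uses Python's standard `time.perf_counter` and `contextlib.contextmanager`; the `Timeline` class and the stage names are hypothetical, and `time.sleep` stands in for real work.

```python
import time
from contextlib import contextmanager


class Timeline:
    """Records (stage, start, end) spans for one task, in execution order."""

    def __init__(self, trace_id):
        self.trace_id = trace_id
        self.spans = []

    @contextmanager
    def stage(self, name):
        start = time.perf_counter()
        try:
            yield
        finally:
            # Recorded even if the stage raises, so failed stages keep their timing.
            self.spans.append((name, start, time.perf_counter()))

    def durations(self):
        return [(name, end - start) for name, start, end in self.spans]


tl = Timeline("task-42")
with tl.stage("queue_wait"):
    time.sleep(0.01)
with tl.stage("fetch"):
    time.sleep(0.03)
with tl.stage("parse"):
    time.sleep(0.005)

for name, secs in tl.durations():
    print(f"{tl.trace_id} {name:<10} {secs * 1000:6.1f} ms")
```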

When something slows down, you can answer:

which stage changed

when it started

how often it repeats

whether it correlates with node choice or concurrency
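Answering “which stage changed” can be as simple as comparing per-stage medians between two time windows. The `stage_shift` helper and the sample numbers (in milliseconds) below are hypothetical:

```python
from statistics import median


def stage_shift(before, after):
    """Compare per-stage median durations between two windows.

    before/after: dict mapping stage name -> list of durations (ms).
    Returns (stage, change in median) pairs, largest absolute change first.
    """
    shifts = {stage: median(after[stage]) - median(before[stage])
              for stage in before.keys() & after.keys()}
    return sorted(shifts.items(), key=lambda kv: abs(kv[1]), reverse=True)


yesterday = {"dns": [4, 5, 4], "connect": [20, 22, 21], "first_byte": [80, 85, 82]}
today = {"dns": [4, 6, 5], "connect": [21, 20, 22], "first_byte": [140, 150, 145]}

print(stage_shift(yesterday, today)[0])  # ('first_byte', 63)
```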

3.2 It Separates Root Cause From Amplifiers

A small issue becomes a large problem because of amplifiers.

Common amplifiers:

retry storms that multiply load

queue pressure that delays everything

tail latency that blocks batch completion

node oscillation that destabilizes timing

Observability shows which part of the problem is the root cause and which part is amplification.

Without that, teams often treat amplifiers as the cause, and nothing improves.
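Retry amplification is one amplifier you can quantify directly: count network attempts per logical task and keep that number separate from how many tasks actually failed. A sketch, assuming each attempt is logged as a hypothetical `(task_id, ok)` pair:

```python
def amplification(attempts):
    """Separate retry amplification from real failures.

    attempts: one (task_id, ok) tuple per network attempt, in any order.
    Returns (attempts per task, tasks that succeeded, tasks that never succeeded).
    """
    tasks = {task for task, _ in attempts}
    succeeded = {task for task, ok in attempts if ok}
    never_succeeded = tasks - succeeded
    factor = len(attempts) / len(tasks)
    return factor, len(succeeded), len(never_succeeded)


# Hypothetical log: "a" works first time, "b" needs three tries, "c" never works.
log = [("a", True),
       ("b", False), ("b", False), ("b", True),
       ("c", False), ("c", False), ("c", False), ("c", False)]

factor, ok, broken = amplification(log)
print(f"{factor:.2f}x load, {ok} succeeded, {broken} never succeeded")
# 2.67x load, 2 succeeded, 1 never succeeded
```

Only one task is truly broken here, yet the dependency sees about 2.67 times the load the task count suggests. Reading the six failed attempts as six separate problems would be treating the amplifier as the cause.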

3.3 It Makes “Normal” Measurable

Many teams cannot say what normal looks like for their own systems.

Observability defines baselines:

typical stage latencies

typical variance

expected retry rates

expected queue depth

normal node health range

Once baselines exist, anomalies become obvious and actionable.
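A baseline can start as plain summary statistics over a period you consider normal. This sketch uses mean and standard deviation from Python's `statistics` module with a hypothetical 3-sigma rule and made-up queue-depth samples. Latency data is usually skewed, so percentile-based baselines are often a better fit in practice.

```python
from statistics import mean, stdev


class Baseline:
    """Baseline for one metric, built from samples taken during normal operation.

    Needs at least two samples (stdev is undefined for fewer).
    """

    def __init__(self, samples):
        self.mu = mean(samples)
        self.sigma = stdev(samples)

    def is_anomalous(self, value, k=3.0):
        # Flag values more than k standard deviations away from the baseline mean.
        return abs(value - self.mu) > k * self.sigma


queue_depth = Baseline([12, 15, 11, 14, 13, 12, 16, 14])
print(queue_depth.is_anomalous(15))  # False
print(queue_depth.is_anomalous(40))  # True
```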

4. The Most Valuable Signals to Capture

4.1 Stage Latency Breakdown

Total latency is too coarse.

You need stage latency.

Key stages:

DNS time

connect time

handshake time

first byte time

transfer time

parse time

downstream call time

queue wait time
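If your client exposes a timestamp at each boundary, the breakdown is just the difference between consecutive marks. The boundary names below are hypothetical and mirror the list above; queue wait and downstream calls are left out and would be further boundaries. The example shows two requests with the same 300 ms total but very different shapes.

```python
# Hypothetical boundary names, in the order they occur during one request.
BOUNDARIES = ["start", "dns_done", "connected", "tls_done",
              "first_byte", "body_done", "parsed"]
STAGES = ["dns", "connect", "handshake", "first_byte", "transfer", "parse"]


def breakdown(ts):
    """ts: dict mapping boundary name -> timestamp (ms). Returns stage -> duration."""
    marks = [ts[b] for b in BOUNDARIES]
    if any(later < earlier for earlier, later in zip(marks, marks[1:])):
        raise ValueError("boundary timestamps are not monotonic")
    return {stage: later - earlier
            for stage, earlier, later in zip(STAGES, marks, marks[1:])}


# Two requests with the same 300 ms total.
r1 = dict(zip(BOUNDARIES, [0, 5, 25, 55, 120, 280, 300]))
r2 = dict(zip(BOUNDARIES, [0, 150, 170, 200, 240, 290, 300]))
print(breakdown(r1))
print(breakdown(r2))
```

The first request spends most of its time in transfer; the second spends half of it in DNS. A total-latency dashboard shows them as identical.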

4.2 Retry Shape and Backoff Behavior

Retry count alone is not enough.

You need the shape:

how quickly retries happen

whether backoff grows

whether retries cluster on a node

whether a failing route keeps receiving traffic
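Retry shape can be read from the timestamps of each attempt. The sketch below checks whether the gaps between attempts grow (as they would under exponential backoff without jitter) and how retries spread across nodes. The 1.5x growth ratio and all sample data are assumptions; jittered backoff needs a looser check, such as comparing average gaps early and late in the sequence.

```python
from collections import Counter


def retry_gaps(attempt_times):
    """Seconds between consecutive attempts of one task."""
    return [later - earlier for earlier, later in zip(attempt_times, attempt_times[1:])]


def backoff_grows(attempt_times, min_ratio=1.5):
    """True if every gap is at least `min_ratio` times the previous gap."""
    gaps = retry_gaps(attempt_times)
    return all(nxt >= min_ratio * cur for cur, nxt in zip(gaps, gaps[1:]))


def retry_share_by_node(retry_nodes):
    """retry_nodes: one node id per retry. Returns node -> share of all retries."""
    counts = Counter(retry_nodes)
    total = sum(counts.values())
    return {node: n / total for node, n in counts.items()}


healthy = [0.0, 0.5, 1.5, 3.5, 7.5]  # gaps 0.5, 1, 2, 4: backoff doubles
storm = [0.0, 0.1, 0.2, 0.3, 0.4]    # ~0.1 s gaps: a tight retry loop
print(backoff_grows(healthy), backoff_grows(storm))  # True False
print(retry_share_by_node(["n1", "n3", "n3", "n3", "n2", "n3"]))
```

With fewer than three attempts there is no growth to check, so `backoff_grows` returns True. In the sample, two thirds of all retries land on `n3`, the kind of clustering that suggests a failing route is still receiving traffic.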

4.3 Node and Route Health Over Time

Observability must track drift:

timing drift per node

success rate per node

tail latency per node

stability under concurrency

This is how you detect deterioration before it becomes an outage.
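An exponentially weighted moving average (EWMA) per node is a lightweight way to track drift: it follows a slow creep without reacting to every single spike. In this sketch the baselines, the smoothing factor `alpha=0.2`, and the 1.5x drift ratio are hypothetical values you would tune from your own data.

```python
class NodeDrift:
    """Tracks an EWMA of latency per node and compares it to that node's baseline."""

    def __init__(self, baselines, alpha=0.2):
        self.baselines = baselines   # node -> normal latency, e.g. from a warm-up window
        self.alpha = alpha           # weight given to the newest sample
        self.ewma = dict(baselines)  # each node starts at its own baseline

    def observe(self, node, latency):
        # Assumes every observed node already has a baseline entry.
        self.ewma[node] = self.alpha * latency + (1 - self.alpha) * self.ewma[node]

    def drifting(self, ratio=1.5):
        return sorted(n for n, v in self.ewma.items() if v > ratio * self.baselines[n])


tracker = NodeDrift({"node-a": 0.20, "node-b": 0.20})
for step in range(30):
    tracker.observe("node-a", 0.20)
    tracker.observe("node-b", 0.20 + 0.02 * step)  # slow upward creep
print(tracker.drifting())  # ['node-b']
```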

4.4 Ordering and Sequencing Integrity

Many pipelines fail quietly through sequence breaks:

missing pages

out-of-order results

skipped cursors

partial chains

Observability must detect these, or output quality collapses silently.
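When pages or results carry sequence numbers, these checks are straightforward. This sketch assumes numbering starts at 1; the `expected_last` parameter is a hypothetical hook for catching pages missing at the end of a run, which cannot be inferred from the received data alone.

```python
def sequence_report(received, expected_last=None):
    """Check sequence integrity for pages/results numbered from 1.

    received: sequence numbers in arrival order.
    expected_last: highest number you expected, if known (catches trailing gaps).
    """
    seen = set()
    duplicates, out_of_order = [], []
    highest = 0
    for n in received:
        if n in seen:
            duplicates.append(n)
            continue
        if n < highest:
            out_of_order.append(n)  # arrived after a larger number
        seen.add(n)
        highest = max(highest, n)
    last = max(highest, expected_last or 0)
    missing = sorted(set(range(1, last + 1)) - seen)
    return {"missing": missing, "duplicates": duplicates, "out_of_order": out_of_order}


print(sequence_report([1, 2, 4, 3, 4, 6, 7], expected_last=8))
# {'missing': [5, 8], 'duplicates': [4], 'out_of_order': [3]}
```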

5. A Beginner-Friendly Example You Can Copy

You do not need a complicated platform to start.

A practical approach:

tag every task with a trace ID

record timestamps at each stage boundary

store node ID, route ID, and concurrency level

record retry count plus retry spacing

alert on stage drift, not only total latency
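Those five points fit in a single structured record per task. Here is a sketch using only the Python standard library; the `TaskRecord` class, its field names, and the sample node and route IDs are hypothetical. Emitting one JSON line per task is enough for most log tools to filter and group by node, route, or concurrency.

```python
import json
import time
import uuid
from dataclasses import dataclass, field, asdict


@dataclass
class TaskRecord:
    node_id: str
    route_id: str
    concurrency: int
    trace_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    stage_marks: dict = field(default_factory=dict)  # stage boundary -> epoch seconds
    retry_times: list = field(default_factory=list)  # when each retry started

    def mark(self, boundary):
        self.stage_marks[boundary] = time.time()

    def retry(self):
        self.retry_times.append(time.time())

    def to_json(self):
        data = asdict(self)
        data["retry_count"] = len(self.retry_times)
        # Spacing between consecutive retries, in seconds.
        data["retry_spacing"] = [round(b - a, 3)
                                 for a, b in zip(self.retry_times, self.retry_times[1:])]
        return json.dumps(data)


rec = TaskRecord(node_id="node-c", route_id="eu-west-2", concurrency=16)
rec.mark("queued")
rec.mark("started")
rec.retry()
rec.retry()
rec.mark("done")
print(rec.to_json())  # one JSON line per task
```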

When a slowdown happens, you can answer in minutes:

which stage moved

which node is responsible

whether retries amplified it

whether it is regional or local
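The regional-versus-local question can be answered by checking how much of each region is affected. A sketch, assuming you already know which nodes showed stage drift; the region map and the 50% spread threshold are hypothetical:

```python
def classify_slowdown(node_region, slowed_nodes, spread_threshold=0.5):
    """Label each affected region 'regional' or 'local'.

    node_region: node id -> region. slowed_nodes: set of node ids whose stages drifted.
    A region is 'regional' when at least `spread_threshold` of its nodes slowed.
    """
    result = {}
    for region in sorted(set(node_region.values())):
        nodes = [n for n, r in node_region.items() if r == region]
        hit = sorted(n for n in nodes if n in slowed_nodes)
        if not hit:
            continue
        share = len(hit) / len(nodes)
        result[region] = ("regional" if share >= spread_threshold else "local", hit)
    return result


fleet = {"a1": "eu", "a2": "eu", "a3": "eu",
         "b1": "us", "b2": "us", "b3": "us", "b4": "us"}
print(classify_slowdown(fleet, {"a1", "a2", "a3", "b2"}))
# {'eu': ('regional', ['a1', 'a2', 'a3']), 'us': ('local', ['b2'])}
```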

This prevents blind tuning and wasted restarts.

6. Where CloudBypass API Fits Naturally

CloudBypass API is valuable in observability because many access problems are timing problems.

It helps expose:

phase-by-phase latency patterns

node-level timing drift

route variance across regions

burst irregularities under concurrency

signals that predict instability before failures spike

Instead of treating access as a black box, teams get a measurable execution path.

That changes operations:

less guessing

fewer blanket reductions in concurrency

faster isolation of unhealthy routes

more stable long-running performance

Issues become harder to diagnose when execution paths lack visibility because you cannot build a timeline.

Without a timeline, symptoms masquerade as causes, and fixes become random experiments.

Observability solves this by turning work into measurable stages, defining baselines, and separating root causes from amplifiers.

Once you can see the path, stability becomes an engineering problem, not a guessing game.